In How to run Wine (graphics-accelerated) in an LXD container on Ubuntu we had a quick look into how to run GUI programs in an LXD (Lex-Dee) container, and have the output appear on the local X11 server (your Ubuntu desktop).

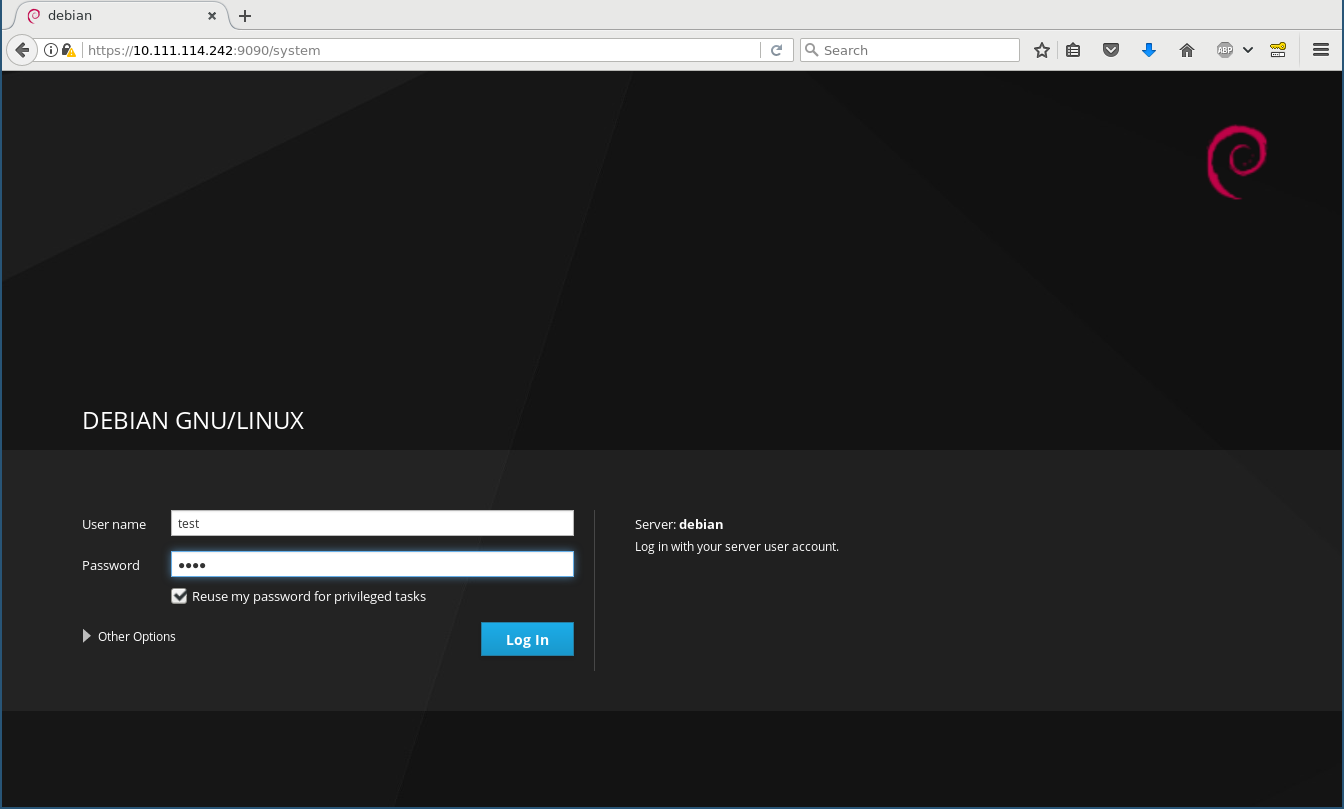

In this post, we are going to see how to

- generalize the instructions in order to run most GUI apps in an LXD container and have them appear on your desktop

- have accelerated graphics support and audio

- test with Firefox, Chromium and Chrome

- create shortcuts to easily launch those apps

The benefits of running GUI apps in an LXD container are

- clear separation of the installation data and settings, from what we have on our desktop

- ability to create a snapshot of this container, save, rollback, delete, recreate; all these in a few seconds or less

- does not mess up your installed package list (for example, all those i386 packages for Wine, Google Earth)

- ability to create an image of such a perfect container, publish, and have others launch in a few clicks
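The snapshot, rollback and publish benefits listed above correspond to a few lxc subcommands. Here is a minimal sketch that wraps them in small functions; the function names, the snapshot name clean and the image alias guiapps-image are hypothetical and only serve as illustration.

```shell
# Take a named snapshot of a container.
# Usage: checkpoint <container> <snapshot-name>
checkpoint() {
    lxc snapshot "$1" "$2"
}

# Roll the container back to a snapshot taken earlier.
# Usage: rollback <container> <snapshot-name>
rollback() {
    lxc restore "$1" "$2"
}

# Turn a snapshot into a reusable image under a local alias.
# Usage: publish_snapshot <container> <snapshot-name> <alias>
publish_snapshot() {
    lxc publish "$1/$2" --alias "$3"
}
```

For example, checkpoint guiapps clean before installing a large application, and rollback guiapps clean if the result is not what we wanted.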

What we are doing today is similar to having a VirtualBox/VMware VM and running a Linux distribution in it. Let’s compare:

- It is similar to the VirtualBox Seamless Mode or the VMware Unity mode

- A VM virtualizes a whole machine and has to do a lot of work in order to provide somewhat good graphics acceleration

- With a container, we directly reuse the graphics card and get graphics acceleration

- The specific setup we show today can potentially allow a container app to interact with the desktop apps (TODO: show desktop isolation in future post)

Browsers have started to offer containers of their own, specifically in-browser containers. This shows a trend towards containers in general, but the feature is browser-specific and is dictated by usability (passwords, form data and search data are shared between the containers).

In the following, our desktop computer will be called the host, and the LXD container will be called the container.

Setting up LXD

LXD is supported in Ubuntu and derivatives, as well as other distributions. When you initially set up LXD, you select where to store the containers. See LXD 2.0: Installing and configuring LXD [2/12] about your options. Ideally, if you select to pre-allocate disk space or use a partition, select at least 15GB but preferably more.

If you plan to play games, increase the space by the size of each game. For best results, select ZFS as the storage backend, and place the storage on an SSD. The post Trying out LXD containers on our Ubuntu may also help.

Creating the LXD container

Let’s create the new container for LXD. We are going to call it guiapps, and install Ubuntu 16.04 in it. There are options for other Ubuntu versions, and even other distributions.

$ lxc launch ubuntu:x guiapps

Creating guiapps

Starting guiapps

$ lxc list

+---------------+---------+--------------------+--------+------------+-----------+

| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |

+---------------+---------+--------------------+--------+------------+-----------+

| guiapps | RUNNING | 10.0.185.204(eth0) | | PERSISTENT | 0 |

+---------------+---------+--------------------+--------+------------+-----------+

$

We created and started an Ubuntu 16.04 (ubuntu:x) container, called guiapps.

Let’s also install our initial testing applications. The first one is xclock, the simplest X11 GUI app. The second is glxinfo, which shows details about graphics acceleration. The third is glxgears, a minimal graphics-accelerated application. The fourth is speaker-test, to test for audio. We will know that our setup works if all four (xclock, glxinfo, glxgears and speaker-test) work in the container!

$ lxc exec guiapps -- sudo --login --user ubuntu

ubuntu@guiapps:~$ sudo apt update

ubuntu@guiapps:~$ sudo apt install x11-apps

ubuntu@guiapps:~$ sudo apt install mesa-utils

ubuntu@guiapps:~$ sudo apt install alsa-utils

ubuntu@guiapps:~$ exit

$

We execute a login shell in the guiapps container as user ubuntu, the default non-root user account in all Ubuntu LXD images. Other distribution images probably have another default non-root user account.

Then, we run apt update in order to update the package list and be able to install the subsequent three packages that provide xclock, glxinfo and glxgears, and speaker-test (or aplay). Finally, we exit the container.

Mapping the user ID of the host to the container (PREREQUISITE)

In the following steps we will be sharing files from the host (our desktop) to the container. There is the issue of what user ID will appear in the container for those shared files.

First, we run on the host (only once) the following command (source),

$ echo "root:$UID:1" | sudo tee -a /etc/subuid /etc/subgid

[sudo] password for myusername:

root:1000:1

$

The command appends a new entry in both the /etc/subuid and /etc/subgid subordinate UID/GID files. It allows the LXD service (runs as root) to remap our user’s ID ($UID, from the host) as requested.
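Since tee -a appends on every run, running the command twice leaves a duplicate entry in both files. A minimal sketch of an idempotent variant follows; the function name ensure_subid_entry is hypothetical, and it takes the file as a parameter so that it can be tried on a scratch copy first.

```shell
# Append "root:<uid>:1" to a subordinate ID file only if it is not there yet.
# Usage: ensure_subid_entry <file> <uid>
ensure_subid_entry() {
    file=$1
    entry="root:$2:1"
    # -x matches the whole line, -F treats the entry as a literal string.
    if grep -qxF "$entry" "$file" 2>/dev/null; then
        echo "already present: $entry"
    else
        echo "$entry" >> "$file"
        echo "added: $entry"
    fi
}
```

On a real system the two files are /etc/subuid and /etc/subgid, and the function has to run as root in order to write to them.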

Then, we specify that we want this feature in our guiapps LXD container, and restart the container for the change to take effect.

$ lxc config set guiapps raw.idmap "both $UID 1000"

$ lxc restart guiapps

$

This “both $UID 1000” syntax is a shortcut that maps the ID of our user on the host, as both user ID and group ID, to ID 1000 in the container. ID 1000 belongs to the default non-root user in the container (it should be 1000 for Ubuntu images, at least).
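The both shortcut assumes that the user ID and the group ID on the host are the same number. If they differ, raw.idmap also accepts separate uid and gid lines. A small sketch that picks the right form; the helper name idmap_value is hypothetical.

```shell
# Print a raw.idmap value that maps a host user to one ID in the container.
# Usage: idmap_value <host-uid> <host-gid> <container-id>
idmap_value() {
    if [ "$1" = "$2" ]; then
        # Same number for user and group: the "both" shortcut applies.
        echo "both $1 $3"
    else
        # Different numbers: map the user and the group separately.
        printf 'uid %s %s\ngid %s %s\n' "$1" "$3" "$2" "$3"
    fi
}
```

It could be used as lxc config set guiapps raw.idmap "$(idmap_value "$(id -u)" "$(id -g)" 1000)", followed by a restart of the container.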

Configuring graphics and graphics acceleration

For graphics acceleration, we are going to use the host graphics card and graphics acceleration. By default, the applications that run in a container do not have access to the host system and cannot start GUI apps.

We need two things: to let the container access the GPU devices of the host, and to make sure that there are no restrictions because of different user IDs.

Let’s attempt to run xclock in the container.

$ lxc exec guiapps -- sudo --login --user ubuntu

ubuntu@guiapps:~$ xclock

Error: Can't open display:

ubuntu@guiapps:~$ export DISPLAY=:0

ubuntu@guiapps:~$ xclock

Error: Can't open display: :0

ubuntu@guiapps:~$ exit

$

We run xclock in the container, and as expected it does not run because we did not indicate where to send the display. We then set the DISPLAY environment variable to the default :0 (send to either a Unix socket or port 6000), which does not work either because we have not fully set things up yet. Let’s do that.

$ lxc config device add guiapps X0 disk path=/tmp/.X11-unix/X0 source=/tmp/.X11-unix/X0

$ lxc config device add guiapps Xauthority disk path=/home/ubuntu/.Xauthority source=/home/${USER}/.Xauthority

We give the container access to the Unix socket of the X server (/tmp/.X11-unix/X0), and make it available at exactly the same path inside the container. In this way, DISPLAY=:0 allows the apps in the container to access our host’s X server through the Unix socket.

Then, we repeat this task with the ~/.Xauthority file that resides in our home directory. This file is for access control, and simply makes our host X server allow access from applications inside that container.
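The socket name follows from the display number: display :N corresponds to /tmp/.X11-unix/XN. A short sketch that derives the path from a local DISPLAY value; the function name x11_socket_for is hypothetical.

```shell
# Print the Unix socket path that a local DISPLAY value refers to.
# Usage: x11_socket_for ":0"   or   x11_socket_for ":1.0"
x11_socket_for() {
    number=${1#*:}        # drop everything up to and including the colon
    number=${number%%.*}  # drop an optional ".screen" suffix
    echo "/tmp/.X11-unix/X$number"
}
```

If echo $DISPLAY on the host prints something other than :0, the output of x11_socket_for "$DISPLAY" is the path to use as source= when adding the disk device.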

How do we get hardware acceleration for the GPU to the container apps? There is a special device for that, and it’s gpu. The hardware acceleration for the graphics card is collectively enabled by running the following,

$ lxc config device add guiapps mygpu gpu

We add the gpu device, and we happen to name it mygpu (any name would suffice). The gpu device was introduced in LXD 2.7, therefore if it is not found, you may have to upgrade your LXD according to https://launchpad.net/~ubuntu-lxc/+archive/ubuntu/lxd-stable. Please leave a comment below if this was your case (mention what LXD version you have been running). Note that for Intel GPUs (my case), you may not need to add this device.

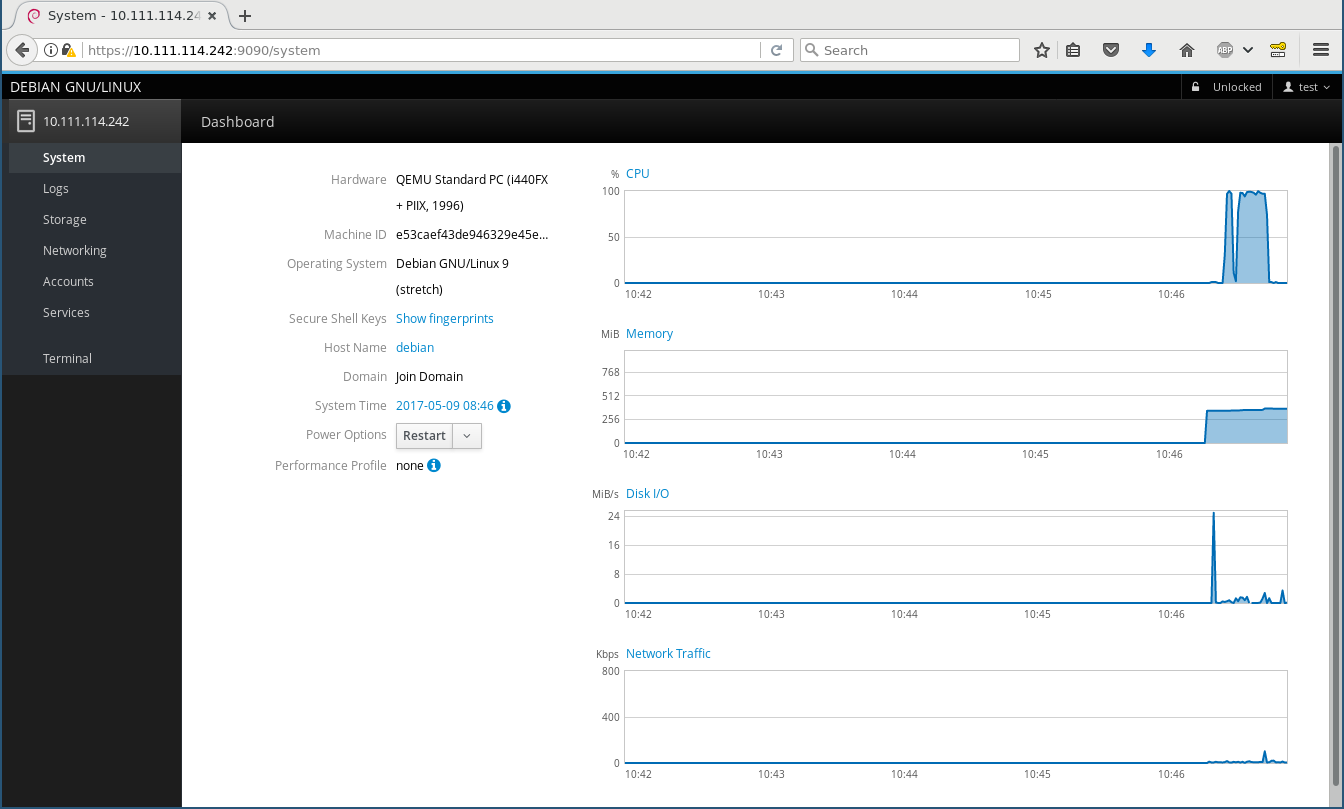

Let’s see what we got now.

$ lxc exec guiapps -- sudo --login --user ubuntu

ubuntu@guiapps:~$ export DISPLAY=:0

ubuntu@guiapps:~$ xclock

ubuntu@guiapps:~$ glxinfo -B

name of display: :0

display: :0 screen: 0

direct rendering: Yes

Extended renderer info (GLX_MESA_query_renderer):

Vendor: Intel Open Source Technology Center (0x8086)

...

ubuntu@guiapps:~$ glxgears

Running synchronized to the vertical refresh. The framerate should be

approximately the same as the monitor refresh rate.

345 frames in 5.0 seconds = 68.783 FPS

309 frames in 5.0 seconds = 61.699 FPS

300 frames in 5.0 seconds = 60.000 FPS

^C

ubuntu@guiapps:~$ echo "export DISPLAY=:0" >> ~/.profile

ubuntu@guiapps:~$ exit

$

Looks good, we are good to go! Note that we edited the ~/.profile file in order to set the $DISPLAY variable automatically whenever we connect to the container.
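The line to look for in the glxinfo output is direct rendering: Yes. A tiny sketch that checks for it in a script; the function name has_direct_rendering is hypothetical, and it reads the glxinfo -B output on standard input.

```shell
# Read `glxinfo -B` output on stdin; succeed only if direct rendering is on.
has_direct_rendering() {
    grep -q '^direct rendering: Yes'
}
```

From the host, it could be used as lxc exec guiapps -- sudo --login --user ubuntu glxinfo -B | has_direct_rendering && echo accelerated.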

Configuring audio

The audio server in Ubuntu desktop is PulseAudio, and PulseAudio has a feature to allow authenticated access over the network, similar to what we did earlier with the X11 server. Let’s set it up.

We install the paprefs (PulseAudio Preferences) package on the host.

$ sudo apt install paprefs

...

$ paprefs

We need to enable a single option: under the Network Server tab, we tick Enable network access to local sound devices. By default all other options are unchecked, and they can remain unchecked.

Then, just like with the X11 configuration, we need to deal with two things: access to the PulseAudio server of the host (either through a Unix socket or an IP address), and some way to get authorization to access the PulseAudio server. Regarding the Unix socket of the PulseAudio server, it is a bit hit and miss (I could not figure out how to use it reliably), so we are going to use the IP address of the host (lxdbr0 interface).

First, the IP address of the host (that has PulseAudio) is the IP of the lxdbr0 interface, which is also the default gateway of the container (ip addr show lxdbr0 on the host). Second, the authorization is provided through the cookie in the host at /home/${USER}/.config/pulse/cookie. Let’s connect these to files inside the container.

$ lxc exec guiapps -- sudo --login --user ubuntu

ubuntu@guiapps:~$ echo export PULSE_SERVER="tcp:`ip route show 0/0 | awk '{print $3}'`" >> ~/.profile

This command appends a line to ~/.profile that sets the variable PULSE_SERVER to a value like tcp:10.0.185.1, which is the IP address of the host on the lxdbr0 interface. The next time we log in to the container, PULSE_SERVER will be configured properly.
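Note that the backticks are evaluated when echo runs, so the address is written into ~/.profile as a fixed value. A sketch of a helper that extracts the gateway from the routing table, so that the lookup can be repeated at each login instead; the function name default_gateway is hypothetical.

```shell
# Read `ip route show` output on stdin and print the default gateway address.
default_gateway() {
    awk '$1 == "default" && $2 == "via" { print $3; exit }'
}
```

With this function defined in ~/.profile, a line such as export PULSE_SERVER="tcp:$(ip route show | default_gateway)" would pick up the current gateway on every login.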

ubuntu@guiapps:~$ mkdir -p ~/.config/pulse/

ubuntu@guiapps:~$ echo export PULSE_COOKIE=/home/ubuntu/.config/pulse/cookie >> ~/.profile

ubuntu@guiapps:~$ exit

$ lxc config device add guiapps PACookie disk path=/home/ubuntu/.config/pulse/cookie source=/home/${USER}/.config/pulse/cookie

Now, this is a tough cookie. By default, the PulseAudio cookie is found at ~/.config/pulse/cookie. The directory tree ~/.config/pulse/ does not exist in the container, and if we do not create it ourselves, then lxc config device add will autocreate it with the wrong ownership. So, we create it (mkdir -p), then add the correct PULSE_COOKIE line in the configuration file ~/.profile. Finally, we exit from the container and bind-mount the cookie from the host to the container. When we log in to the container again, the cookie variable will be correctly set!

Let’s test the audio!

$ lxc exec guiapps -- sudo --login --user ubuntu

ubuntu@guiapps:~$ speaker-test -c6 -twav

speaker-test 1.1.0

Playback device is default

Stream parameters are 48000Hz, S16_LE, 6 channels

WAV file(s)

Rate set to 48000Hz (requested 48000Hz)

Buffer size range from 32 to 349525

Period size range from 10 to 116509

Using max buffer size 349524

Periods = 4

was set period_size = 87381

was set buffer_size = 349524

0 - Front Left

4 - Center

1 - Front Right

3 - Rear Right

2 - Rear Left

5 - LFE

Time per period = 8.687798 ^C

ubuntu@guiapps:~$

If you do not have 6-channel audio output, you will hear audio on some of the channels only.

Let’s also test with an MP3 file, like the one from https://archive.org/details/testmp3testfile

ubuntu@guiapps:~$ sudo apt install mplayer

...

ubuntu@guiapps:~$ wget https://archive.org/download/testmp3testfile/mpthreetest.mp3

...

ubuntu@guiapps:~$ mplayer mpthreetest.mp3

MPlayer 1.2.1 (Debian), built with gcc-5.3.1 (C) 2000-2016 MPlayer Team

...

AO: [pulse] 44100Hz 2ch s16le (2 bytes per sample)

Video: no video

Starting playback...

A: 3.7 (03.7) of 12.0 (12.0) 0.2%

Exiting... (Quit)

ubuntu@guiapps:~$

All nice and loud!

Troubleshooting sound issues

AO: [pulse] Init failed: Connection refused

An application tries to connect to a PulseAudio server, but no PulseAudio server is found (either none autodetected, or the one we specified is not really there).

AO: [pulse] Init failed: Access denied

We specified a PulseAudio server, but we do not have access to connect to it. We need a valid cookie.

AO: [pulse] Init failed: Protocol error

You also tried to make the Unix socket work, but something went wrong. If you manage to make it work, write a comment below.
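The three messages above can be folded into a small lookup for use in scripts. This is only a sketch: the function name pulse_error_hint is hypothetical and the hints restate the explanations given in this section.

```shell
# Map a PulseAudio error message to a hint about what to check.
# Usage: pulse_error_hint "<error message>"
pulse_error_hint() {
    case $1 in
        *"Connection refused"*) echo "no server reachable: check PULSE_SERVER and the paprefs network option" ;;
        *"Access denied"*)      echo "not authorized: check PULSE_COOKIE and the shared cookie file" ;;
        *"Protocol error"*)     echo "Unix socket problem: use the TCP address of the host instead" ;;
        *)                      echo "unrecognized message" ;;
    esac
}
```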

Testing with Firefox

Let’s test with Firefox!

ubuntu@guiapps:~$ sudo apt install firefox

...

ubuntu@guiapps:~$ firefox

Gtk-Message: Failed to load module "canberra-gtk-module"

We get a message that the GTK+ module is missing. Let’s close Firefox, install the module and start Firefox again.

ubuntu@guiapps:~$ sudo apt-get install libcanberra-gtk3-module

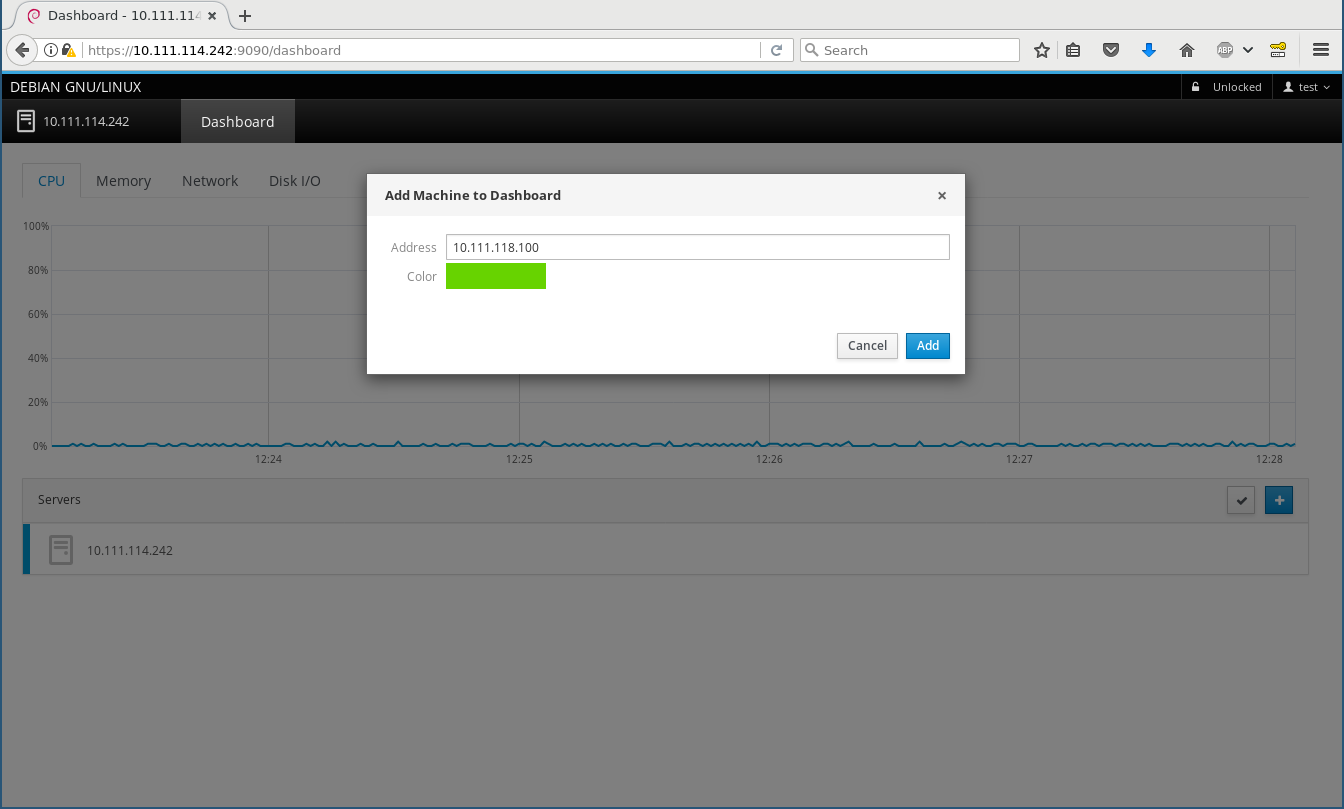

ubuntu@guiapps:~$ firefox

Here we are playing a YouTube music video at 1080p. It works as expected. The Firefox session is separated from the host’s Firefox.

Note that the theming is not exactly what you get with Ubuntu. This is due to the container being so lightweight that it does not have any theming support.

The screenshot may look a bit grainy; this is due to some plugin I use in WordPress that does too much compression.

You may notice that no menubar is showing. Just like with Windows, simply press the Alt key for a second, and the menu bar will appear.

Testing with Chromium

Let’s test with Chromium!

ubuntu@guiapps:~$ sudo apt install chromium-browser

ubuntu@guiapps:~$ chromium-browser

Gtk-Message: Failed to load module "canberra-gtk-module"

So, chromium-browser also needs a libcanberra package, and it’s the GTK+ 2 package.

ubuntu@guiapps:~$ sudo apt install libcanberra-gtk-module

ubuntu@guiapps:~$ chromium-browser

There is no menubar and there is no easy way to get to it. The menu on the top-right is available though.

Testing with Chrome

Let’s download Chrome, install it and launch it.

ubuntu@guiapps:~$ wget https://dl.google.com/linux/direct/google-chrome-stable_current_amd64.deb

...

ubuntu@guiapps:~$ sudo dpkg -i google-chrome-stable_current_amd64.deb

...

Errors were encountered while processing:

google-chrome-stable

ubuntu@guiapps:~$ sudo apt install -f

...

ubuntu@guiapps:~$ google-chrome

[11180:11945:0503/222317.923975:ERROR:object_proxy.cc(583)] Failed to call method: org.freedesktop.UPower.GetDisplayDevice: object_path= /org/freedesktop/UPower: org.freedesktop.DBus.Error.ServiceUnknown: The name org.freedesktop.UPower was not provided by any .service files

[11180:11945:0503/222317.924441:ERROR:object_proxy.cc(583)] Failed to call method: org.freedesktop.UPower.EnumerateDevices: object_path= /org/freedesktop/UPower: org.freedesktop.DBus.Error.ServiceUnknown: The name org.freedesktop.UPower was not provided by any .service files

^C

ubuntu@guiapps:~$ sudo apt install upower

ubuntu@guiapps:~$ google-chrome

There are these two errors regarding UPower and they go away when we install the upower package.

Creating shortcuts to the container apps

If we want to run Firefox from the container, we can simply run

$ lxc exec guiapps -- sudo --login --user ubuntu firefox

and that’s it.

To make a shortcut, we create the following file on the host,

$ cat > ~/.local/share/applications/lxd-firefox.desktop

[Desktop Entry]

Version=1.0

Name=Firefox in LXD

Comment=Access the Internet through an LXD container

Exec=/usr/bin/lxc exec guiapps -- sudo --login --user ubuntu firefox %U

Icon=/usr/share/icons/HighContrast/scalable/apps-extra/firefox-icon.svg

Type=Application

Categories=Network;WebBrowser;

^D

$ chmod +x ~/.local/share/applications/lxd-firefox.desktop

We need to make it executable so that it gets picked up and we can then run it by double-clicking.
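The same file can be generated for the other browsers instead of typing it each time. A minimal sketch, assuming the same launch command as above; the function name lxd_desktop_entry and its parameters are hypothetical, and the icon argument is whatever icon name or path exists on your system.

```shell
# Print a .desktop entry that launches <command> inside <container>.
# Usage: lxd_desktop_entry <container> <command> <display-name> <icon>
lxd_desktop_entry() {
    cat <<EOF
[Desktop Entry]
Version=1.0
Name=$3 in LXD
Exec=/usr/bin/lxc exec $1 -- sudo --login --user ubuntu $2 %U
Icon=$4
Type=Application
EOF
}
```

For example, lxd_desktop_entry guiapps chromium-browser Chromium chromium-browser > ~/.local/share/applications/lxd-chromium.desktop writes the entry for Chromium, which then needs the same chmod +x step.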

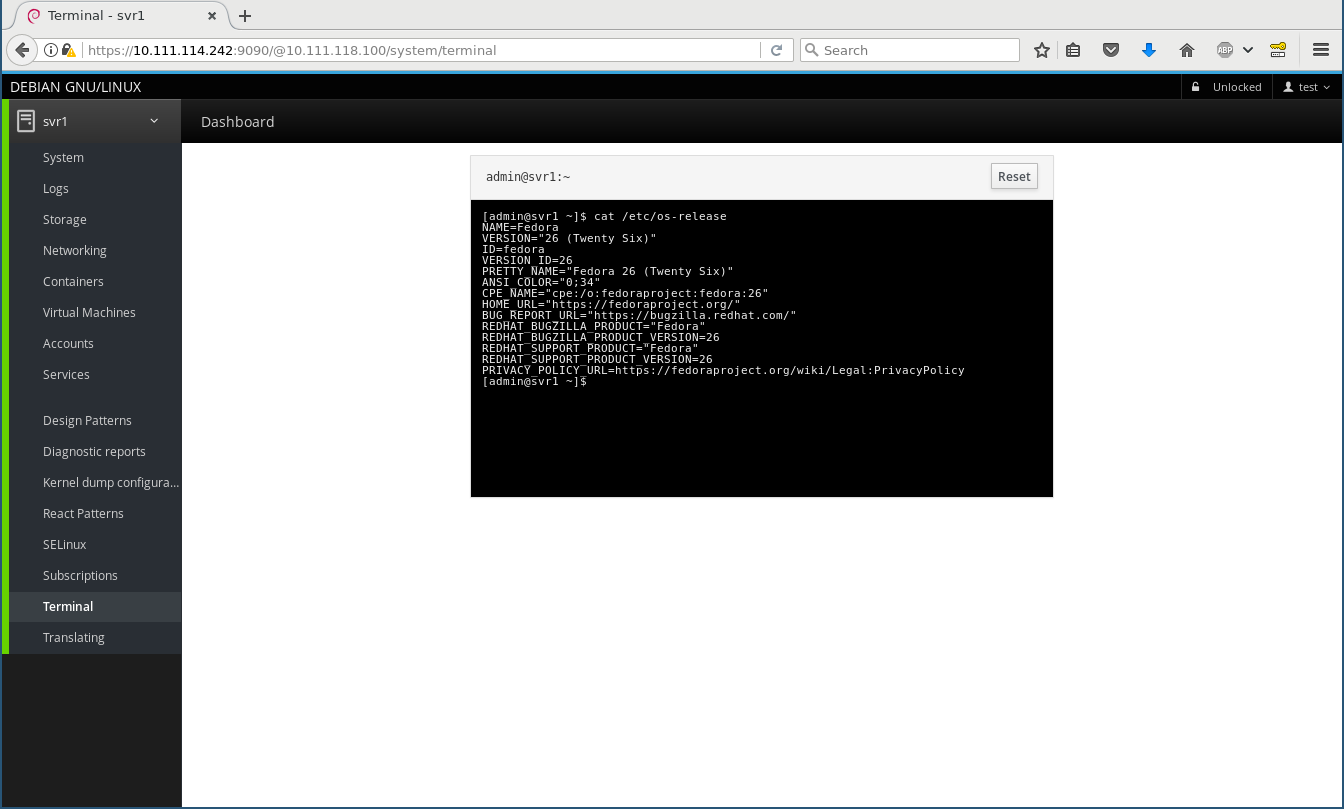

If it does not appear immediately in the Dash, use your File Manager to locate the directory ~/.local/share/applications/

This is what the icon looks like in a File Manager. The icon comes from the high-contrast set, which, I now remember, means just two colors 🙁

Here is the app on the Launcher. Simply drag from the File Manager and drop to the Launcher in order to get the app at your fingertips.

I hope the tutorial was useful. We explain the commands in detail. In a future tutorial, we are going to try to figure out how to automate these!

Except where otherwise noted, content in this issue is licensed under a Creative Commons Attribution 3.0 License BY SA
